Evaluating an Online Training Course to Develop and Sustain Cancer Navigation and Survivorship Programs

Objectives: To evaluate the impact of the Executive Training on Navigation and Survivorship (Executive Training) online training course, designed by the George Washington University Cancer Center, on nurses and other healthcare professionals.

Sample & Setting: A volunteer sample of 499 healthcare professionals, including nurses and patient navigators, was recruited through multiple Internet-based channels.

Methods & Variables: Participants completed questionnaires before and after each module and at the end of the training. Descriptive statistics were calculated, and paired t tests were used to assess pre- and post-test learning confidence gains for each module. Qualitative feedback from participants was also summarized.

Results: From pre- to post-test, each group demonstrated statistically significant improvements in confidence (p < 0.05) for all seven training modules. Confidence gains were statistically significant for 19 of 20 learning objectives (p < 0.05). Overall rating scores and qualitative feedback were positive.

Implications for Nursing: The Executive Training course prepares healthcare professionals from diverse backgrounds to establish navigation and survivorship programs. In addition, the training content addresses gaps in nursing education on planning and budgeting that can improve success.


More than 16.9 million people in the United States are living with a history of cancer (Miller et al., 2019). Because of a growing and aging population, as well as improvements in screening and treatment, this number continues to increase. According to Miller et al. (2019), the estimated number of survivors in the United States is predicted to rise to as many as 22.1 million people by 2030.

Background

Patient navigation and evidence-based survivorship guidelines aim to address health disparities in cancer care and to improve overall quality of life for cancer survivors. Patient navigators can provide culturally affirming communication, refer patients to additional resources, and troubleshoot barriers to timely, coordinated cancer care (Freeman, 2012). The Commission on Cancer standards require accredited cancer programs to include a patient navigation process and to provide survivorship care plans (American College of Surgeons, 2012). In addition, survivorship care plans have been implemented as a strategy to improve care coordination and long-term follow-up care for survivors who have transitioned out of active cancer treatment (Salz & Baxi, 2016). According to a report from the Institute of Medicine (Hewitt, Greenfield, & Stovall, 2006), a variety of cancer survivorship care models have emerged that are coordinated by diverse clinicians, such as oncologists, advanced practice nurses, physician assistants, and primary care providers (Halpern et al., 2015; McCabe, 2012; Mead, Pratt-Chapman, Gianattasio, Cleary, & Gerstein, 2017; Rosenzweig, Kota, & van Londen, 2017; Spears, Craft, & White, 2017). Clinical guidelines for survivorship care have also been established for certain tumor types and treatment side effects (American Cancer Society, 2018; American Society for Clinical Oncology, n.d.; National Comprehensive Cancer Network, 2019). Despite the establishment of survivorship care guidelines, maintaining sustainable financing for patient navigation and cancer survivorship programs remains a substantial issue in many settings.

Program leaders continue to experiment with how to design sustainable patient navigation and survivorship care programs that best meet the needs of patients and their family caregivers (McCabe, 2012). However, literature that can help to guide program leaders in developing or implementing patient-centered programming is limited. Challenges for navigation programs may include patient recruitment, navigator training, intensive services and patient contact, and data collection (Wells et al., 2011). Careful planning, community engagement, strong community partnerships, ongoing process monitoring, and flexibility to modify the program have been cited as success factors for existing navigation programs (Steinberg et al., 2006). DeGroff, Coa, Morrissey, Rohan, and Slotman (2014) have offered guidance for navigation program development, suggesting that setting program goals, identifying navigator responsibilities, training navigators, and evaluating the program are key considerations. Additional challenges for survivorship programs include a lack of program flexibility; patient identification and risk stratification; and issues with sustainability and institutionalization stemming from low revenue, amount of staff effort, and time needed to yield organizational change (Jefford et al., 2015). According to Kirsch, Patterson, and Lipscomb (2015), sustainable funding, workflow optimization, technology integration, and technical assistance improve sustainability of survivorship programs.

The purpose of this study was to evaluate the effectiveness of the online Executive Training on Navigation and Survivorship (Executive Training) course based on self-reported confidence with learning outcomes from pre- to post-test and satisfaction ratings. A subanalysis of nurse participants and qualitative feedback on the training from participants are also reported.

Methods

Design

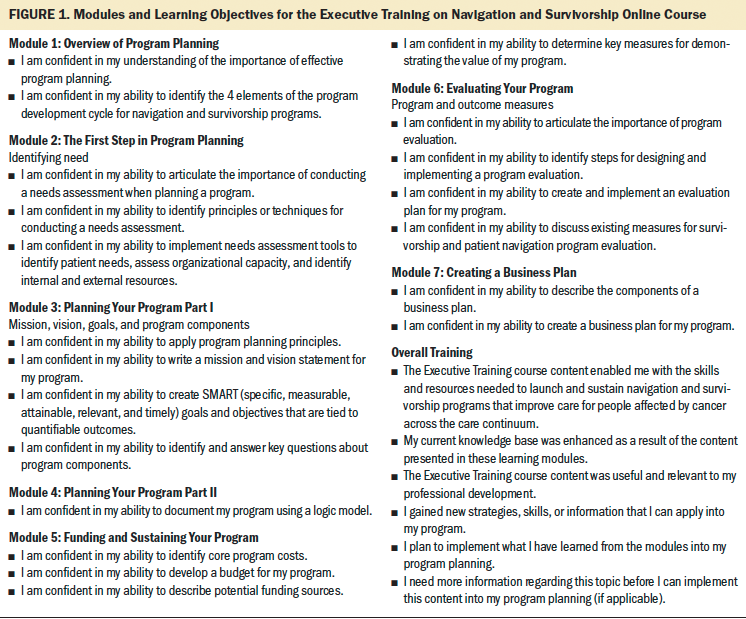

The Executive Training online course was designed by the George Washington University (GW) Cancer Center to bolster the business acumen of program leaders tasked with developing patient navigation and cancer survivorship programs. In alignment with program planning frameworks, such as those proposed by Healthy People 2020 (U.S. Department of Health and Human Services, 2019), the Centers for Disease Control and Prevention ([CDC], 2013), and nursing processes at the individual level (American Nurses Association [ANA], n.d.), training content was developed around four steps: assess, plan, implement, and evaluate. As many as 100 program leaders from across the United States participated in live training sessions that were offered from 2010 to 2012. As a result of positive, longitudinal feedback from the initial live training, the GW Cancer Center proposed creating an online version of the training to reach a broader group of learners. The curriculum was refined and adapted for a self-paced online course through a CDC cooperative agreement (DP13-1315). The on-demand course, which launched in December 2014, is housed within the GW Cancer Center’s Online Academy (https://go.gwu.edu/gwcconlineacademy). The overall goal of the training is to increase participants’ ability to develop, implement, and sustain patient-centered survivorship programs across diverse settings. The Executive Training consists of seven learning modules with specific learning objectives (see Figure 1).

Each module has an interactive 20-minute audiovisual presentation. Executive Training learners can download the Guide for Program Development (http://bit.ly/ExecTrainGuide), which summarizes research, guidelines, care standards, best practices, case studies, and practice tools. In addition, the Program Development Workbook (http://bit.ly/ExecTrainWorkbook) includes activities that help participants to apply what they have learned and to create their own customized program plan. Although learners are required to complete each module in sequence, they can complete the modules at their own pace across several sittings. Access to the course is not limited; however, facilitators follow up with inactive learners on a quarterly basis to check whether they intend to complete the course.

Sample and Setting

The Executive Training course was promoted through GW Cancer Center websites, email distribution lists, and professional organization channels. A convenience sample of participants (N = 906) voluntarily enrolled in the training course from December 2014 to January 2017. At the time of the writing of this article, some participants had not completed the training in its entirety; therefore, sample sizes varied across the seven modules. Participants were counted in a training module sample if they had completed pre- and postevaluation questions for that module. Ninety participants were excluded because of incomplete evaluation data. Because the diversity of oncology training and healthcare services at the international level made the interpretation of learning outcomes difficult, an additional 10 participants practicing outside of the United States were excluded. Demographic data were missing for 307 participants, resulting in a final analytical sample of 499 who completed evaluation questions for at least one learning module.
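The participant flow described in this section reduces to a single subtraction. As a quick check, the following minimal sketch restates that arithmetic in Python; the variable names are illustrative and are not taken from the study's analysis files.

```python
# Participant flow as reported in the Sample and Setting section.
# Variable names are illustrative, not taken from the study's files.
enrolled = 906                 # enrolled December 2014 to January 2017
incomplete_evaluation = 90     # excluded: incomplete evaluation data
outside_united_states = 10     # excluded: practicing outside the United States
missing_demographics = 307     # excluded: demographic data missing

analytic_sample = (
    enrolled
    - incomplete_evaluation
    - outside_united_states
    - missing_demographics
)
print(analytic_sample)  # 499
```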

Data Collection

Data were collected to evaluate and improve the course. Participants voluntarily enrolled in the course and completed evaluation questionnaires; therefore, based on GW institutional review board guidance, this study did not fall under human subjects research and learners were not asked to provide informed consent.

At the start of the Executive Training course, participants answered questions on a brief background survey. Demographic variables included age, race, ethnicity, geographic location, profession, specialty, and practice setting. To assess exposure to past training, participants were asked, “Have you taken other courses or completed additional training in this topic area?”

Design of the Executive Training evaluation was informed by Kirkpatrick’s Evaluation Model (Kirkpatrick & Kirkpatrick, 2006; Reio, Rocco, Smith, & Chang, 2017), which proposes four levels of training assessment: participant reaction (e.g., training satisfaction), learning (e.g., increased knowledge or confidence), behavior (e.g., job performance), and results (e.g., organizational changes). Participants enrolled in the Executive Training course completed several questionnaires designed to assess their reactions to the course and learning outcomes. The questionnaires were internally developed at GW and are not part of a validated scale.

Participant learning was assessed through pre- and post-test questions specific to each module’s objectives. Each module included one to four questions that measured confidence on learning objectives using a five-point Likert-type scale ranging from 1 (strongly disagree) to 5 (strongly agree). Questions were posed at pretest and repeated verbatim during post-test once participants completed the module content.

Participants who completed the seventh module were asked to complete a general evaluation questionnaire on overall training effectiveness and satisfaction, which assessed their reactions and behavioral intentions. To inform future training improvements, participants were asked to provide feedback and suggestions in open-ended comment boxes following each module and in the general evaluation upon completion of the entire training.

Data Analysis

Participant data were imported into Stata/IC, version 14.2, for cleaning and analysis. Descriptive statistics were obtained for demographic variables. Summary means were calculated for each module’s pre- and post-test scores. Dependent sample t tests were used to evaluate the statistical significance (p < 0.05) of pre- and post-test confidence gains on learning objectives. General evaluation responses were summarized using percentages. One hundred and sixty-two responses from open-ended feedback questions were aggregated into a Microsoft® Excel spreadsheet and reviewed for general tone (positive or negative) and recurring content. More rigorous qualitative analysis was not possible because of the limited number of participants who opted to provide comments and the brevity of available comments.
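To illustrate the dependent sample t test named above, the following is a minimal sketch in Python rather than the Stata commands the authors ran, which are not reported. The function name `paired_t` and the eight pairs of ratings are hypothetical and exist only to show the calculation: the test statistic is the mean pre- to post-test gain divided by its standard error.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (mean gain, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Each learner serves as their own control: analyze post minus pre.
    gains = [after - before for before, after in zip(pre, post)]
    mean_gain = statistics.mean(gains)
    sd_gain = statistics.stdev(gains)  # sample SD of the differences
    if sd_gain == 0:
        raise ValueError("all gains are identical; t is undefined")
    t_stat = mean_gain / (sd_gain / math.sqrt(len(gains)))
    return mean_gain, t_stat, len(gains) - 1


# HYPOTHETICAL confidence ratings (1 = strongly disagree, 5 = strongly agree)
# for eight learners on one module; these are not study data.
pre_scores = [3, 3, 2, 4, 3, 3, 2, 4]
post_scores = [4, 4, 3, 4, 4, 5, 3, 4]

mean_gain, t_stat, df = paired_t(pre_scores, post_scores)
print(f"mean gain = {mean_gain:.2f}, t({df}) = {t_stat:.2f}")
```

With 7 degrees of freedom, the two-sided critical value at p < 0.05 is about 2.365, so a larger absolute t statistic would be judged statistically significant. An exact p value requires the t distribution, which statistical packages such as Stata supply.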

Participants were able to choose multiple professional roles on the demographic survey. Respondents who identified as any type of nurse were included in a nurse subset. All others were considered to be part of a non-nurse group of participants. Chi-square tests were conducted to examine specialty and training differences among nurse and non-nurse participants. Module averages and confidence gains from pre- to post-test for nursing participants were summarized separately from the full sample. For each module, pre- to post-test changes for nurses were compared to those of non-nurses using independent samples t tests.
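The chi-square comparison of nurses and non-nurses can be sketched for a single 2 x 2 table as follows. This is an illustration only: the cell counts are assumptions, back-calculated from the reported subgroup size (263 nurses of 499 participants) and the reported oncology specialty percentages (81% and 59%), and they further assume that every participant answered the specialty question. The function name `chi_square_2x2` is hypothetical, and the sketch uses the Pearson statistic without a continuity correction, which may differ from the authors' exact procedure.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns (statistic, p_value). With 1 degree of freedom, the upper-tail
    p value equals erfc(sqrt(statistic / 2)).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column needs at least one observation")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# ASSUMED counts, approximated from reported percentages; not study data.
nurses_oncology, nurses_other = 213, 50          # about 81% of 263 nurses
non_nurses_oncology, non_nurses_other = 139, 97  # about 59% of 236 non-nurses

statistic, p_value = chi_square_2x2(
    nurses_oncology, nurses_other, non_nurses_oncology, non_nurses_other
)
print(f"chi-square(1) = {statistic:.2f}, p = {p_value:.2g}")
```

Under these assumed counts the statistic exceeds 3.841, the critical value for 1 degree of freedom at p < 0.05, which is in line with the significant difference the study reports.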

The final analytical sample size was large enough to detect at least a medium effect size with 80% power and a type I error rate of 5%. Parametric tests were used because they have been shown to be robust against violations of statistical assumptions without being overly conservative (Norman, 2010). The stability of findings was confirmed by replicating analyses using equivalent nonparametric tests and obtaining similar findings.
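The power statement can be made concrete with a rough calculation. The sketch below uses the standard normal approximation and assumes that "medium" refers to Cohen's conventional standardized effect size of d = 0.5; the authors do not report which method or effect size definition they used, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def pairs_needed(effect_size, alpha=0.05, power=0.80):
    """Approximate number of pre/post pairs for a two-sided paired t test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    return math.ceil(((z_alpha + z_power) / effect_size) ** 2)


def per_group_needed(effect_size, alpha=0.05, power=0.80):
    """Approximate size of each group for a two-sided independent samples t test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


MEDIUM_EFFECT = 0.5  # ASSUMPTION: Cohen's convention for a medium effect

paired_n = pairs_needed(MEDIUM_EFFECT)
group_n = per_group_needed(MEDIUM_EFFECT)
print(paired_n, group_n)  # 32 63
```

The normal approximation slightly understates what an exact t-based calculation gives, but either way the requirement is far below the 499 participants in the analytical sample and the 263 nurses in the subset.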

Results

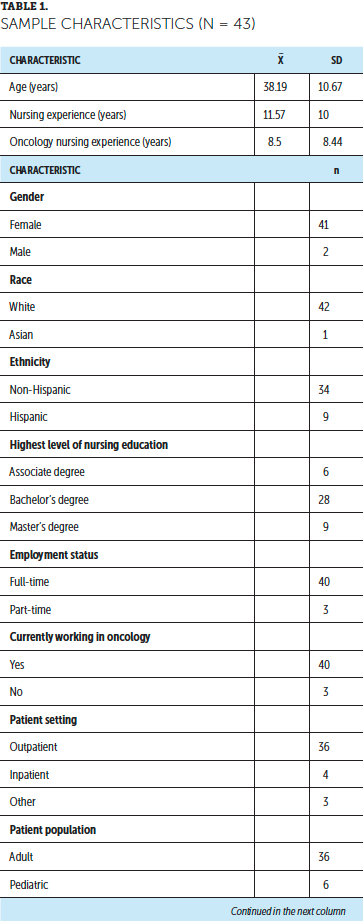

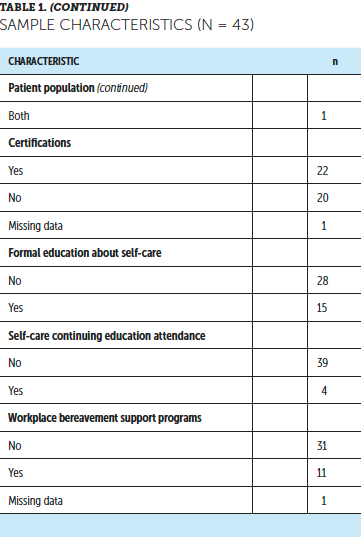

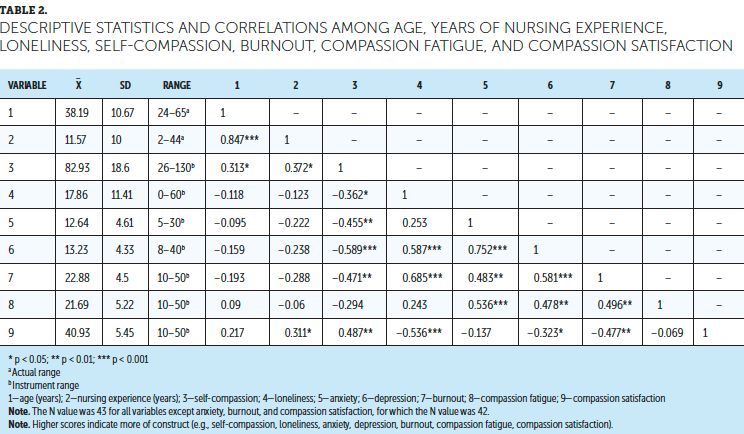

The majority of participants identified as female, White, and non-Hispanic (see Table 1). Participants varied in age, with the majority being aged 40–59 years. Learners who self-identified as nurses (n = 263) included overlapping identifications as RNs, nurse navigators, or nurse practitioners. Non-nurse participants included patient navigators, administrators, social workers, health educators, and physicians or physician assistants. Most learners indicated an oncology specialty, with the remainder indicating internal medicine, family medicine, or other clinical areas. Two hundred and ninety-eight participants completed all seven modules. Because participants enrolled on a rolling basis and completed modules at their own pace, the remaining 201 participants were at various stages of progress during the evaluation. It is unknown whether these individuals completed the remaining modules or dropped out of the training.

In the full sample, mean self-rated confidence scores on learning objectives at post-test were significantly higher than at pretest (p < 0.0001) across all seven modules (see Table 2). Mean module ratings ranged from 3.1 to 3.5 at pretest and from 3.8 to 4.3 at post-test, indicating improvement from a rating of neutral confidence (3) to agree (4). Mean pre- to post-test confidence gains ranged from 0.6 (module 5) to 1 point (module 7). Average post-test scores were higher at a statistically significant level for 19 of 20 individual learning objectives. The module 5 learning objective, “I am confident in my ability to describe potential funding sources,” was the exception.

Compared to non-nurse participants, nurses more often reported an oncology specialty (81% versus 59%) and no past training in designated topic areas (69% versus 57%); chi-square tests revealed that these differences were statistically significant (p < 0.05). The nurse subset had lower pretest scores than the overall sample, with average confidence scores ranging from 2.9 to 3.4. This subset also had the same or slightly lower post-test scores, with average confidence scores ranging from 3.8 to 4.2. Among the nurse subset, pre- to post-test gains in mean confidence scores were statistically significant across all modules (p < 0.0001). Nurses did not differ from non-nurses on confidence gains for any module at a statistically significant level.

In the general evaluation following completion of the course, 93% of learners agreed (4) or strongly agreed (5) that the training content provided the skills and resources needed to develop and sustain navigation and survivorship programs. A majority of participants agreed that the training module content enhanced their knowledge base (95%), provided new applicable strategies and skills (93%), and was useful and relevant to their professional development (92%). Most respondents (88%) intended to implement newly learned strategies, skills, and information into future program planning. However, half of participants (51%) reported needing additional information before being able to implement knowledge gains.

Review of the qualitative responses indicated that participants appreciated the training: “This is an incredibly in-depth training, and I found it to be exceptionally helpful as I start a new oncology nurse navigation program.” Although participants were not asked why they enrolled in the training, a few responses mentioned new programs or initiatives spurring a need for skill development. In addition, responses revealed that training content addressed an information gap: “[A]s a non-business clinician, I found it extremely helpful to be able to talk the language of administrators [who] provide funding for programs and to be able to make a compelling case for important initiatives.” Participants particularly appreciated that the training was free, self-directed online, and interactive. Several comments identified issues with the training, such as technical challenges with the online platform, broken hyperlinks, and narration that was too fast. Feedback on whether the training was too basic or on par for an introductory course was conflicting.

Discussion

Evaluation of data from the Executive Training indicates that the course has reached a geographically and professionally diverse, yet demographically homogeneous, group of learners. Findings from the general evaluation and qualitative responses from participants supported Kirkpatrick’s first evaluation level of learner reactions. High general evaluation scores indicated that most participants found the training to be useful and relevant, with qualitative comments describing the course as helpful and excellent, supporting the belief that many participants had positive reactions to the training. In addition, responses expressing appreciation for the Executive Training’s interactive components suggest that this type of teaching strategy successfully engages learners. Although some participants were unhappy with technical issues and narration speed, staff at GW responded to this feedback by correcting noted issues in the online system to improve the user experience and reduce hindrances to learning.

Learning, the second level of Kirkpatrick’s model, was assessed by comparing pre- and post-test assessments. The finding that post-test confidence scores were higher on learning objectives for all modules suggests that the Executive Training course is effective in increasing learner confidence with program planning, evaluation, and sustainment. The training was effective in increasing confidence for the interprofessional sample as a whole and among nurses particularly, indicating wide applicability and the opportunity for broad dissemination to improve practice. However, the training was not effective in increasing participants’ confidence in identifying funding sources for navigation and survivorship care programs. Because funding is a persistent issue for supportive care services across many healthcare settings, this finding was not surprising. Additional research is warranted on how to appropriately fund navigation and survivorship care programs to support cost-effective, high-quality health care.

Although Kirkpatrick’s third and fourth levels (behavior and results, respectively) were not directly assessed, the majority of participants reported that the Executive Training course provided relevant skills and knowledge that can be applied in the practice setting. However, roughly half of participants believed that they required additional information prior to implementing a program, suggesting the need for more advanced training or directed coaching. Specific information was not reported, but the following suggestions were made in the open-response comments: study guides for patient navigator certification, information on the comparative effectiveness of different navigation models, additional detail on how to create job descriptions, further explanation on distinguishing mission and vision statements, additional detail on logic model components, and more time dedicated to program funding and evaluation. Responses also indicated that additional practical examples demonstrating the application of the modules would be valuable to future learners. To address these suggestions, the GW Cancer Center developed a standalone guide, Advancing the Field of Cancer Patient Navigation: A Toolkit for Comprehensive Cancer Control Professionals (http://bit.ly/PNPSEGuide), which is linked to the online training course. This toolkit can be used to educate and train patient navigators, provide technical assistance to coalition members, build navigation networks, and identify policy approaches to sustain patient navigation programs. Finally, participant demographics indicate that targeted promotion to underrepresented professions, such as physicians, physician assistants, and learners practicing in rural settings, may be warranted.

Limitations

The sample was self-selected and, therefore, may represent healthcare professionals who are particularly motivated to learn, involved in professional organizations, and more comfortable with technology. Because learner diversity was limited in terms of gender, racial, and ethnic demographics, these findings cannot be generalized. The evaluation tool used to assess learning outcomes was developed internally to assess confidence changes based on content-specific learning objectives, so the measure was not psychometrically validated.

Although self-efficacy is an important precursor to behavior, the training evaluation was based solely on participants’ self-reported confidence without measured knowledge questions or follow-up assessments on abilities and application in practice (Strecher, Devellis, Becker, & Rosenstock, 1986). The source, depth, and content of past trainings that were completed by participants were not collected. Information on specific degrees and levels of education was not systematically collected from the sample. These omissions limit understanding of participants’ baseline knowledge. In addition, the evaluation captured a static snapshot of data while enrollment and course progress were ongoing; therefore, it cannot be determined whether participants who had not completed the training by the time of the evaluation intended to continue or dropped out because of barriers to participation. Finally, an experimental design was not used, and no comparison groups could serve as counterfactuals.

Implications for Nursing

Despite the growth of navigation and survivorship care services for patients with cancer, healthcare professionals tasked with leading program implementation may lack the necessary skill set to manage and sustain such programs. In this interprofessional sample, most participants denied having past exposure to the topic areas addressed in the Executive Training course. The majority of participants rated their confidence as neutral on pretest module learning objectives. Stratified analyses revealed that nurses reported lower confidence at baseline than non-nurses in skills, such as creating a mission and vision statement, developing a logic model, identifying program costs, developing program budgets, and drafting a business plan. Nurses also reported statistically significant increases in confidence on these topic areas after completing training content and had learning gains that were similar to their non-nurse peers. Because the level of education for nurses and non-nurses was not systematically assessed, the reason for baseline differences is unclear. However, the findings of this study suggest an opportunity in nursing education for teaching practical skills on demonstrating program value.

Nurses are an important component in shaping oncology navigation and cancer survivorship clinical practice. According to the ANA (2015), resource utilization is a standard of professional performance in which nurses use appropriate resources to coordinate evidence-based practices that are safe and cost-effective. Previous studies recognize that nurses may lack the educational background in how to address financial issues or assist with strategic planning, resulting in the marginalization of nursing perspectives in organizational decisions (Muller & Karsten, 2012; Saxe-Braithwaite, 2003). To secure administrative support for patient-centered services, it is important that nursing leaders have the ability to strategically plan programs, establish metrics that rigorously demonstrate value, and communicate with financial savvy (Grant, Economou, Ferrell, & Uman, 2012; Lubejko et al., 2017; Rishel, 2014; Strusowski et al., 2017).

In qualitative feedback, some participants expressed that the financial content presented in module 5 of the Executive Training course was not directly relevant to their roles. Nurses working in navigation and survivorship may not have the responsibility to secure funding for their programs; however, Rishel (2014) suggests that all nurses, not only those in senior leadership roles, should have an understanding and appreciation of evaluation and financial measures involved in organizational decision making. Nurses who document and track patient information or communicate needs for resources are essential to overall program evaluation and sustainability, even if they are not directly responsible for budgetary concerns.

Nurses’ educational backgrounds may vary depending on their degree level and when or where they were educated. For learners who have been previously educated on program planning, evaluation, and sustainability, the Executive Training course may build on baseline knowledge by providing additional practical details and content-specific examples. For those with no past exposure to these topic areas, the course provides an intensive practice-based introduction. The Executive Training course serves as a resource for nurses and other healthcare professionals who are expected to learn on the job.

Conclusion

The Executive Training course is an efficient resource that can improve confidence in survivorship and navigation program development and evaluation. Online learning platforms can be effective in reaching a wide range of interprofessional learners across diverse geographic and practice settings. Content and skills that are presented in the Executive Training course can address a gap in nursing education on survivorship and navigation programs for patients with cancer. The Executive Training course can continue to have an impact through additional dissemination and targeting to a variety of healthcare professionals.

About the Author(s)

Serena Phillips, RN, MPH, is a research associate, Aubrey V.K. Villalobos, MPH, MEd, is the director of cancer control and health equity, and Mandi Pratt-Chapman, MA, is the associate center director, all in the Institute for Patient-Centered Initiatives and Health Equity at the George Washington University Cancer Center in Washington, DC. This research was funded by a cooperative agreement (1U38DP004972-04) from the Centers for Disease Control and Prevention. Villalobos contributed to the conceptualization and design and completed the data collection. Phillips provided statistical support. All authors provided the analysis and contributed to the manuscript preparation. Pratt-Chapman can be reached at mandi@gwu.edu, with copy to ONFEditor@ons.org. (Submitted January 2019. Accepted March 6, 2019.)

References

American Cancer Society. (2018). American Cancer Society survivorship care guidelines. Retrieved from https://www.cancer.org/health-care-professionals/american-cancer-societ…

American College of Surgeons. (2012). Cancer program standards 2012: Ensuring patient-centered care [v.1.2.1]. Retrieved from https://www.facs.org/~/media/files/quality%20programs/cancer/coc/progra…

American Nurses Association. (2015). Nursing: Scope and standards of practice (3rd ed.). Silver Spring, MD: Author.

American Nurses Association. (n.d.). The nursing process. Retrieved from https://www.nursingworld.org/practice-policy/workforce/what-is-nursing/…

American Society for Clinical Oncology. (n.d.). Guidelines on survivorship care. Retrieved from https://www.asco.org/practice-guidelines/cancer-care-initiatives/preven…

Centers for Disease Control and Prevention. (2013). Roadmap for state program planning. Retrieved from https://www.cdc.gov/dhdsp/programs/spha/roadmap/index.htm

DeGroff, A., Coa, K., Morrissey, K.G., Rohan, E., & Slotman, B. (2014). Key considerations in designing a patient navigation program for colorectal cancer screening. Health Promotion Practice, 15, 483–495. https://doi.org/10.1177/1524839913513587

Freeman, H.P. (2012). The origin, evolution, and principles of patient navigation. Cancer Epidemiology, Biomarkers and Prevention, 21, 1614–1617. https://doi.org/10.1158/1055-9965.EPI-12-0982

Grant, M., Economou, D., Ferrell, B., & Uman, G. (2012). Educating health care professionals to provide institutional changes in cancer survivorship care. Journal of Cancer Education, 27, 226–232. https://doi.org/10.1007/s13187-012-0314-7

Halpern, M.T., Viswanathan, M., Evans, T.S., Birken, S.A., Basch, E., & Mayer, D.K. (2015). Models of cancer survivorship care: Overview and summary of current evidence. Journal of Oncology Practice, 11, e19–e27. https://doi.org/10.1200/JOP.2014.001403

Hewitt, M., Greenfield, S., & Stovall, E. (Eds.). (2006). From cancer patient to cancer survivor: Lost in transition. Washington, DC: National Academies Press.

Jefford, M., Kinnane, N., Howell, P., Nolte, L., Galetakis, S., Bruce Mann, G., . . . Whitfield, K. (2015). Implementing novel models of posttreatment care for cancer survivors: Enablers, challenges and recommendations. Asia-Pacific Journal of Clinical Oncology, 11, 319–327. https://doi.org/10.1111/ajco.12406

Kirkpatrick, D.L., & Kirkpatrick, J.D. (2006). Evaluating training programs: The four levels (3rd ed.). San Francisco, CA: Berrett-Koehler.

Kirsch, L.J., Patterson, A., & Lipscomb, J. (2015). The state of cancer survivorship programming in Commission on Cancer-accredited hospitals in Georgia. Journal of Cancer Survivorship, 9, 80–106. https://doi.org/10.1007/s11764-014-0391-1

Lubejko, B.G., Bellfield, S., Kahn, E., Lee, C., Peterson, N., Rose, T., . . . McCorkle, M. (2017). Oncology nurse navigation: Results of the 2016 role delineation study. Clinical Journal of Oncology Nursing, 21, 43–50. https://doi.org/10.1188/17.CJON.43-50

McCabe, M.S. (2012). Cancer survivorship: We’ve only just begun. Journal of the National Comprehensive Cancer Network, 10, 1772–1774.

Mead, H., Pratt-Chapman, M., Gianattasio, K., Cleary, S., & Gerstein, M. (2017). Identifying models of cancer survivorship care [Abstract 1]. Journal of Clinical Oncology, 35(Suppl.), 1. https://doi.org/10.1200/JCO.2017.35.5_suppl.1

Miller, K.D., Nogueira, L., Mariotto, A.B., Rowland, J.H., Yabroff, K.R., Alfano, C.M., . . . Siegel, R. (2019). Cancer treatment and survivorship statistics, 2019. CA: A Cancer Journal for Clinicians. Advance online publication. https://doi.org/10.3322/caac.21565

Muller, R., & Karsten, M. (2012). Do you speak finance? Nursing Management, 43(3), 52–54.

National Comprehensive Cancer Network. (2019). NCCN Clinical Practice Guidelines in Oncology (NCCN Guidelines®): Survivorship [v.2.2019]. Retrieved from https://www.nccn.org/professionals/physician_gls/pdf/survivorship.pdf

Norman, G. (2010). Likert scales, levels of measurement and the “laws” of statistics. Advances in Health Sciences Education, 15, 625–632. https://doi.org/10.1007/s10459-010-9222-y

Reio, T.G., Rocco, T.S., Smith, D.H., & Chang, E. (2017). A critique of Kirkpatrick’s Evaluation Model. New Horizons in Adult Education and Human Resource Development, 29, 35–53. https://doi.org/10.1002/nha3.20178

Rishel, C.J. (2014). Financial savvy: The value of business acumen in oncology nursing. Oncology Nursing Forum, 41, 324–326. https://doi.org/10.1188/14.ONF.324-326

Rosenzweig, M.Q., Kota, K., & van Londen, G. (2017). Interprofessional management of cancer survivorship: New models of care. Seminars in Oncology Nursing, 33, 449–458. https://doi.org/10.1016/j.soncn.2017.08.007

Salz, T., & Baxi, S. (2016). Moving survivorship care plans forward: Focus on care coordination. Cancer Medicine, 5, 1717–1722. https://doi.org/10.1002/cam4.733

Saxe-Braithwaite, M. (2003). Nursing entrepreneurship: Instilling business acumen into nursing healthcare leadership. Nursing Leadership, 16(3), 40–42.

Spears, J.A., Craft, M., & White, S. (2017). Outcomes of cancer survivorship care provided by advanced practice RNs compared to other models of care: A systematic review [Online exclusive]. Oncology Nursing Forum, 44, E34–E41. https://doi.org/10.1188/17.ONF.E34-E41

Steinberg, M.L., Fremont, A., Khan, D.C., Huang, D., Knapp, H., Karaman, D., . . . Streeter, O.E., Jr. (2006). Lay patient navigator program implementation for equal access to cancer care and clinical trials: Essential steps and initial challenges. Cancer, 107, 2669–2677. https://doi.org/10.1002/cncr.22319

Strecher, V.J., Devellis, B.M., Becker, M.H., & Rosenstock, I.M. (1986). The role of self-efficacy in achieving health behavior change. Health Education Quarterly, 13, 73–92. https://doi.org/10.1177/109019818601300108

Strusowski, T., Sein, E., Johnston, D., Gentry, S., Bellomo, C., Brown, E., . . . Messier, N. (2017). Standardized evidence-based oncology navigation metrics for all models: A powerful tool in assessing the value and impact of navigation programs. Journal of Oncology Navigation and Survivorship, 8, 220–237.

U.S. Department of Health and Human Services. (2019). MAP-IT: A guide to using Healthy People 2020 in your community. Retrieved from https://www.healthypeople.gov/2020/tools-and-resources/Program-Planning

Wells, K.J., Meade, C.D., Calcano, E., Lee, J.H., Rivers, D., & Roetzheim, R.G. (2011). Innovative approaches to reducing cancer health disparities: The Moffitt Cancer Center Patient Navigator Research Program. Journal of Cancer Education, 26, 649–657. https://doi.org/10.1007/s13187-011-0238-7